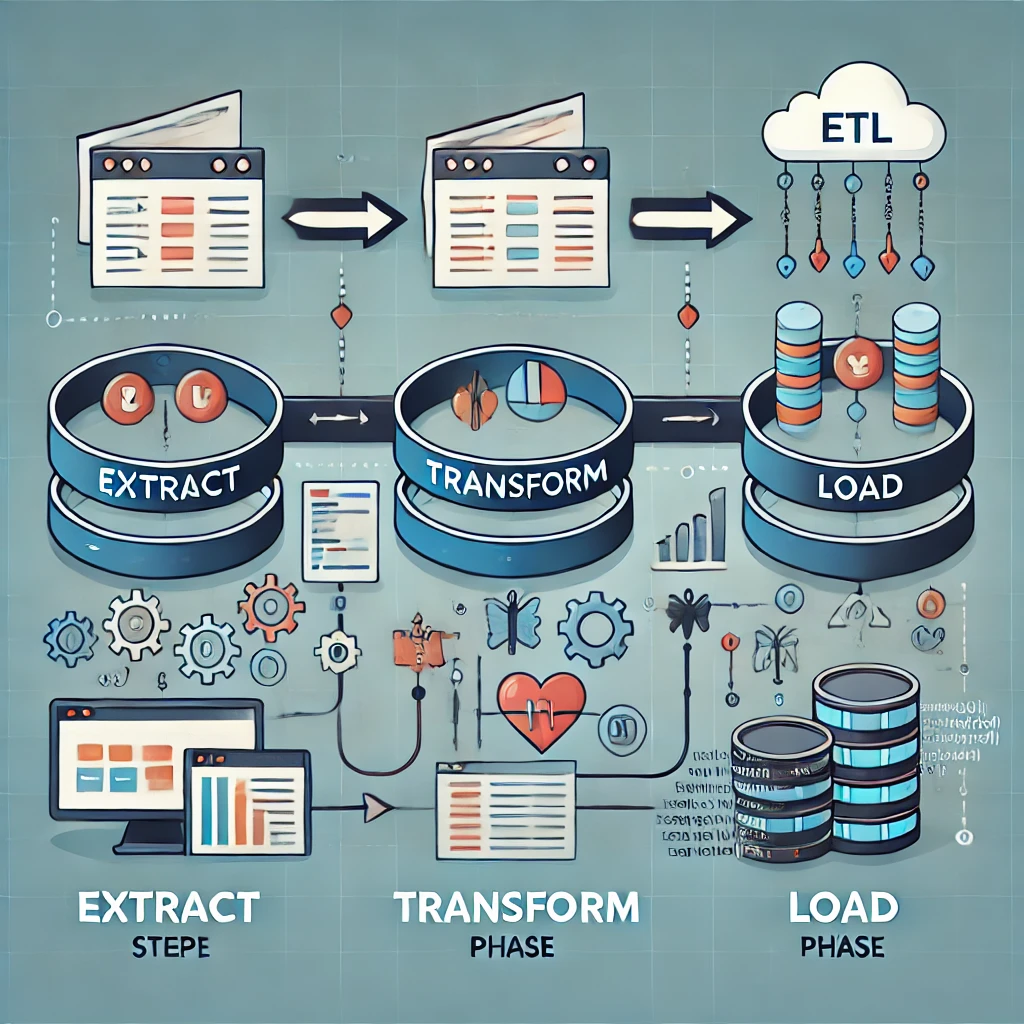

In today’s competitive retail landscape, monitoring prices across multiple retailers is essential. Web scraping plays a key role in gathering this data, but scraped data is only useful if it is accurate and consistent, which is what an ETL (Extract, Transform, Load) process provides. In this post, we’ll explore how ETL is applied in market price monitoring, using grocery supermarkets as an example.

Step 1: Extracting Relevant Price Data

Web scraping allows you to pull price data from various HTML elements across multiple grocery supermarket websites. It’s crucial to capture not only the original price but also any promotional prices, special deals, bundle offers (e.g., “buy one, get one free” or bundled gifts), and the capacity or weight of the product (e.g., 500g, 1kg, 1L). This information is especially important in the grocery sector, where unit price comparison (e.g., per kg or per litre) is essential. Additionally, capturing the date when the price was scraped is vital for tracking price fluctuations over time.
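
As a rough illustration of the fields worth capturing, here is a minimal sketch using only Python's standard library. The HTML snippet, the class names (`name`, `price`, `promo`, `size`), the retailer name, and the `RawPriceRecord` type are all assumptions made up for this example; real supermarket pages are structured differently and usually need a dedicated scraping library.

```python
from dataclasses import dataclass
from datetime import date
from html.parser import HTMLParser

# Hypothetical product markup; real pages use different tags and class names.
SAMPLE_HTML = """
<div class="product">
  <span class="name">Whole Milk</span>
  <span class="price">£1.45</span>
  <span class="promo">2 for £2.50</span>
  <span class="size">2L</span>
</div>
"""

FIELDS = {"name", "price", "promo", "size"}


@dataclass
class RawPriceRecord:
    """One scraped product, kept as raw text until the transform step."""
    retailer: str
    name: str
    price_text: str
    promo_text: str
    size_text: str
    scraped_on: date


class ProductParser(HTMLParser):
    """Collects the text of <span> elements whose class is one of FIELDS."""

    def __init__(self):
        super().__init__()
        self.current = None
        self.values = {}

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in FIELDS:
            self.current = css_class

    def handle_data(self, data):
        if self.current and data.strip():
            self.values[self.current] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self.current = None


def extract_record(html, retailer, scraped_on):
    """Parse one product block and attach the retailer and scrape date."""
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    v = parser.values
    return RawPriceRecord(
        retailer=retailer,
        name=v.get("name", ""),
        price_text=v.get("price", ""),
        promo_text=v.get("promo", ""),
        size_text=v.get("size", ""),
        scraped_on=scraped_on,
    )


record = extract_record(SAMPLE_HTML, "ExampleMart", date.today())
print(record)
```

Keeping the values as raw text at this stage is a deliberate choice: it leaves all cleaning to the transform step, so a parsing mistake can be fixed later without scraping the page again.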

Step 2: Transforming Data for Consistency

The data extracted from supermarkets often comes in unstructured text form. In this step, the raw data is cleaned and transformed into a usable format:

- Text-to-Value Conversion: Price data often appears as free text (e.g., “£2.50” or “3 for £5”). It’s essential to convert this text into numerical values to enable analysis: “£2.50” becomes 2.50, while a multi-buy offer such as “3 for £5” needs both a quantity (3) and a total price (5.00).

- Price Formatting and Unit Standardization: Convert prices into a consistent format by removing symbols like “£” and converting amounts given in “p” (pence) into pounds (e.g., “75p” becomes 0.75 rather than 75), and standardize unit labels (e.g., kg, g, L). This ensures all prices, regardless of the unit or currency format, are uniform for accurate comparisons across retailers.

- Categorizing Product Items: Ensure grocery items are accurately categorized into main categories (e.g., fruits, vegetables, dairy) and sub-categories (e.g., apples, leafy greens, cheese) to facilitate detailed analysis.
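
The text-to-value and price formatting steps above can be sketched as follows. This is one possible approach rather than a complete parser: the function names `parse_price` and `parse_offer` are hypothetical, and the patterns only cover the formats mentioned in this post (pounds, pence, and "N for £X" offers).

```python
import re
from decimal import Decimal

# Patterns for the price formats discussed above; other formats return None.
POUNDS = re.compile(r"^£\s*(\d+(?:\.\d{1,2})?)$")
PENCE = re.compile(r"^(\d+)\s*p$", re.IGNORECASE)
MULTIBUY = re.compile(r"^(\d+)\s+for\s+(.+)$", re.IGNORECASE)


def parse_price(text):
    """Return the price in pounds as a Decimal, or None if unrecognized."""
    text = text.strip()
    match = POUNDS.match(text)
    if match:
        return Decimal(match.group(1))
    match = PENCE.match(text)
    if match:
        # Pence are converted to pounds, not just stripped of the "p".
        return Decimal(match.group(1)) / 100
    return None


def parse_offer(text):
    """Return (quantity, total_price); single prices get quantity 1."""
    match = MULTIBUY.match(text.strip())
    if match:
        total = parse_price(match.group(2))
        return (int(match.group(1)), total) if total is not None else None
    price = parse_price(text)
    return (1, price) if price is not None else None


print(parse_price("£2.50"))     # 2.50
print(parse_price("75p"))       # 0.75
print(parse_offer("3 for £5"))  # (3, Decimal('5'))
```

`Decimal` is used instead of `float` so that money values are not affected by binary rounding. Returning `None` for unrecognized text makes it easy to log and review formats the patterns do not yet handle.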

This transformation ensures that the data is comprehensive, consistent, and ready for further analysis.
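
Once sizes are standardized, the unit price comparison mentioned in Step 1 becomes a simple division. The sketch below assumes sizes arrive as text like "500g" or "1L", and that kilograms and litres are the chosen base units; `parse_size` and `unit_price` are hypothetical helper names.

```python
import re
from decimal import Decimal, ROUND_HALF_UP

# Conversion factors to a base unit: kilograms for weight, litres for volume.
TO_BASE = {
    "g": ("kg", Decimal("0.001")),
    "kg": ("kg", Decimal("1")),
    "ml": ("l", Decimal("0.001")),
    "l": ("l", Decimal("1")),
}

SIZE = re.compile(r"^(\d+(?:\.\d+)?)\s*(kg|g|ml|l)$", re.IGNORECASE)


def parse_size(text):
    """Return (amount_in_base_unit, base_unit), or None if unrecognized."""
    match = SIZE.match(text.strip())
    if not match:
        return None
    base_unit, factor = TO_BASE[match.group(2).lower()]
    return Decimal(match.group(1)) * factor, base_unit


def unit_price(price, size_text):
    """Return (price per base unit rounded to pence, base_unit), or None."""
    parsed = parse_size(size_text)
    if parsed is None or parsed[0] == 0:
        return None
    amount, base_unit = parsed
    per_unit = (price / amount).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
    return per_unit, base_unit


print(unit_price(Decimal("2.50"), "500g"))  # (Decimal('5.00'), 'kg')
```

With this in place, a 500g pack at £2.50 and a 1kg pack at £4.20 can be compared directly on their per-kg price.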

Step 3: Loading into a Trusted Database

Once transformed, the cleaned and standardized data is loaded into a reliable, centralized database. Storing each record together with its retailer, product identifier, and scrape date makes the data easy to manage, query, and access. With the data properly stored, businesses can use data analytics tools to track pricing trends, compare product SKUs across supermarkets, and support work ranging from price forecasting to consumer behavior analysis.
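
As a small illustration of the load step, the sketch below uses SQLite through Python's built-in `sqlite3` module. The table layout, the column names, the helper functions `load_prices` and `compare_retailers`, and the sample rows are all assumptions invented for this example; a production setup would more likely use a server-based database or a data warehouse.

```python
import sqlite3

# Hypothetical schema: one row per retailer, SKU, and scrape date.
# Prices are stored as REAL for brevity; integer pence is a safer choice for money.
SCHEMA = """
CREATE TABLE IF NOT EXISTS prices (
    retailer    TEXT NOT NULL,
    sku         TEXT NOT NULL,
    category    TEXT,
    price_gbp   REAL NOT NULL,
    unit_price  REAL,
    base_unit   TEXT,
    scraped_on  TEXT NOT NULL,
    PRIMARY KEY (retailer, sku, scraped_on)
)
"""


def load_prices(conn, rows):
    """Insert rows, replacing any earlier row for the same retailer, SKU, and date."""
    with conn:  # commits on success, rolls back on error
        conn.execute(SCHEMA)
        conn.executemany(
            "INSERT OR REPLACE INTO prices VALUES (?, ?, ?, ?, ?, ?, ?)", rows
        )


def compare_retailers(conn, sku, scraped_on):
    """Return (retailer, price) pairs for one SKU on one date, cheapest first."""
    cursor = conn.execute(
        "SELECT retailer, price_gbp FROM prices "
        "WHERE sku = ? AND scraped_on = ? ORDER BY price_gbp, retailer",
        (sku, scraped_on),
    )
    return cursor.fetchall()


conn = sqlite3.connect(":memory:")
# Made-up sample rows for illustration only.
load_prices(conn, [
    ("ExampleMart", "milk-2l", "dairy", 1.45, 0.73, "l", "2024-01-15"),
    ("SampleFoods", "milk-2l", "dairy", 1.55, 0.78, "l", "2024-01-15"),
])
print(compare_retailers(conn, "milk-2l", "2024-01-15"))
```

The composite primary key means that re-running a scrape for the same day overwrites the earlier rows instead of duplicating them, which keeps the price history clean.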

Final Thoughts

Web scraping offers significant benefits to various stakeholders in the grocery supply chain:

- Supermarkets gain up-to-date insights into competitors’ prices (as current as the most recent scrape), enabling them to adjust their pricing strategies and remain competitive.

- Suppliers can track how their products are priced across different retailers, allowing them to refine their sales strategies and optimize promotions.

- Research firms can use the data to analyze market trends, study consumer behavior, and evaluate the effectiveness of pricing and promotional strategies.

Combined with a well-designed ETL process, web scraping captures key data such as pricing, promotional offers, and product details efficiently and in a consistent form, enabling stakeholders to make well-informed, data-driven decisions. Coupled with data analytics tools, this process helps businesses stay ahead in an increasingly competitive and dynamic marketplace.
